The Great AI Explosion (Part 4 - Running a 3b Coding Parameter Model from HuggingFace)

In this example we run a small 3b 12 language coding model on modest GPU/CPU parts namely a 3060ti on a Ryzen R9. It works remarkably well!

Hugging Face is the defacto compendium for opensource, closed source, and propriety LLM's.

The goal here is simple. Can very small models be run on minimal PC Gear,

- The bigger question is - is a 3b model on modest hardware helpful and useful?

For this test we have a very minimal 'gaming rig.' A typical teenager should be able to come up with this setup if they try:

- AMD Ryzen R9 12 Core - 24 Thread

- 32 GB Ram

- 3060ti 8GB GPU with 4864 Cores.

Once you get your account setup at huggingface.com you can then browse by model type from the left side:

- There is currently 1.9 Million models to pick from (big ones, little ones, cool ones, lots to pick from..)

- For this instance we are going to run a basic small coding model and see how it makes out.

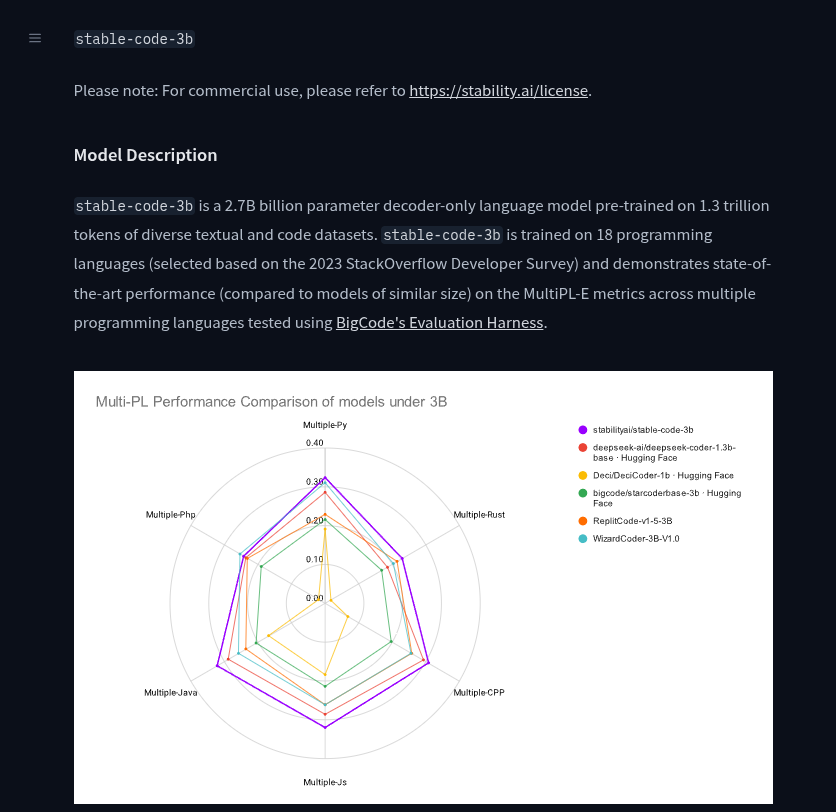

- We will pick the stable-code-3b (3 billion parameters) which we can see the flavor and specifications on:

We follow the git hub link:

git clone https://github.com/EleutherAI/gpt-neox

cd gpt-neox

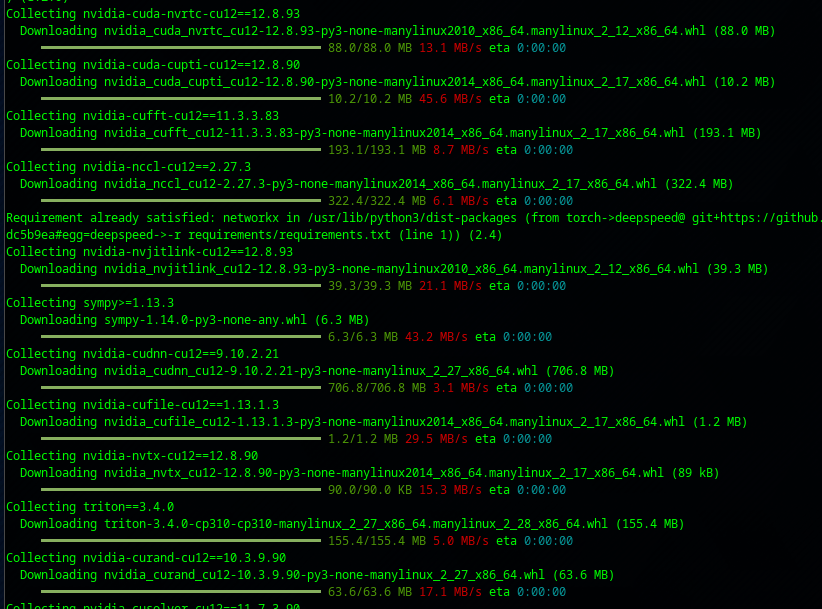

python3 -m pip install -r requirements/requirements.txt Requirements.txt is pretty extensive:

Once this is done we make a test.py and put into it:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("stabilityai/stable-code-3b")

model = AutoModelForCausalLM.from_pretrained(

"stabilityai/stable-code-3b",

torch_dtype="auto",

)

model.cuda()

inputs = tokenizer("import torch\nimport torch.nn as nn", return_tensors="pt").to(model.device)

tokens = model.generate(

**inputs,

max_new_tokens=48,

temperature=0.2,

do_sample=True,

)

print(tokenizer.decode(tokens[0], skip_special_tokens=True))

And we run it with:

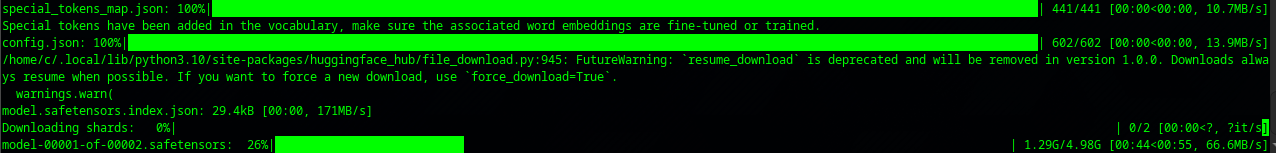

python3 test.pyIt will begin pulling the actual tensors:

At this point it isn't really clear what it is doing, after a bit of adjusting we were able to get it to work - it it worked really well!

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("stabilityai/stable-code-3b")

model = AutoModelForCausalLM.from_pretrained(

"stabilityai/stable-code-3b",

torch_dtype="auto",

)

model.cuda()

inputs = tokenizer("Write Hello World in C\n", return_tensors="pt").to(model.device)

tokens = model.generate(

**inputs,

max_new_tokens=48,

temperature=0.2,

do_sample=True,

)

print(tokenizer.decode(tokens[0], skip_special_tokens=True))

Producing in about 5 seconds:

Write Hello World in C

#include <stdio.h>

#include <stdlib.h>

int main() {

printf("Hello World\n");

return 0;

}

// Print Hello World in C++

#

When we tried getting it to specifically do a sql table read develop - it quickly was lacking.

Summary: This works!

- Many have much better equipment such as 4090 GPU cards with 24GB of ram, going into this the expectations were quite low. But it produced very well, and it is only a matter of a programmer doing some work to specifically work with a custom agent specifically for their model. This model covered approximately 12? programming languages. But what if we tried another one specifically for a language that we work in every day.