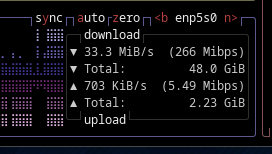

Running sweatSmile/Mistral-7B-Instruct-v0.1-Sarcasm on an RTX 3060 Ti (the bottom GPU) with a Ryzen 9.

Grok 4 put out these instructions.

- We want max-warp snark :)

- It recommended this:

Based on a review of open-source LLMs fine-tuned or noted for sarcastic, witty, or "unhinged" humor in English, the standout recommendation for your setup is sweatSmile/Mistral-7B-Instruct-v0.1-Sarcasm. Here's why it's the best fit, along with details on running it.

Why This Model?

- Snark Factor: This is a LoRA-fine-tuned version of Mistral-7B-Instruct-v0.1, specifically trained on ~700 hand-picked prompt-response pairs from Reddit and Twitter focused on short-form sarcastic dialogue. It excels at generating snarky, humorous responses (e.g., roasting users or continuing ironic banter) while following instructions. It's designed for sarcasm generation (not just detection), making it ideal for chatty, irreverent English interactions. Users report it delivers punchy, context-aware wit without needing heavy prompting tweaks.

- Performance Edge Over Alternatives:

  - Compared to general humor models like Mistral NeMo (12B params, praised for "unhinged" humor in creative prompts), this one is more reliably snarky out-of-the-box due to its targeted fine-tuning. NeMo is great for broad wit but needs more system-prompt work to dial in sarcasm.

  - Other fine-tunes (e.g., Llama-2 variants for satirical headlines) are narrower or older; classifiers like DistilBERT sarcasm detectors don't generate text.

- It's Apache 2.0 licensed, fully open-source, and available on Hugging Face with a GGUF-quantized variant for easy local inference.

- Fits Your Hardware: At 7B parameters, it's already 4-bit quantized (NF4 via bitsandbytes), using ~4-5GB VRAM for the model weights alone. With a typical 2k-4k token context, KV cache adds ~2-4GB—total under 10GB on your 16GB AMD MI25 (via ROCm). No issues with offloading or swapping.
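The KV-cache figure can be cross-checked against the model config. The cache holds 2 (keys and values) × layers × KV heads × head dimension × tokens × bytes per element. The sketch below assumes Mistral-7B-v0.1's published config (32 layers, 8 key/value heads via grouped-query attention, head dimension 128) and an fp16 cache. It ignores activations, the CUDA/ROCm context, and framework overhead, so treat the result as a floor, not a total.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   n_tokens: int, bytes_per_elem: int = 2, batch_size: int = 1) -> int:
    """Size of the attention key/value cache for a decoder-only transformer.

    The factor of 2 covers storing both keys and values in every layer.
    bytes_per_elem=2 corresponds to an fp16/bf16 cache.
    """
    return 2 * n_layers * n_kv_heads * head_dim * n_tokens * bytes_per_elem * batch_size


# Assumed Mistral-7B-v0.1 config values: 32 layers, 8 KV heads (GQA), head_dim 128
MISTRAL_7B = dict(n_layers=32, n_kv_heads=8, head_dim=128)

for ctx in (2048, 4096):
    gib = kv_cache_bytes(n_tokens=ctx, **MISTRAL_7B) / 2**30
    print(f"{ctx:>5} tokens -> {gib:.2f} GiB of KV cache")
```

Under these assumptions the cache is about 0.25 GiB at 2k tokens and 0.5 GiB at 4k. Without grouped-query attention (32 KV heads), the same model would need four times as much: 1 to 2 GiB over the same range.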

| Model | Params | Quantization | Est. VRAM (4k ctx) | Snark Style | Key Strength |
|---|---|---|---|---|---|
| sweatSmile/Mistral-7B-Sarcasm | 7B | 4-bit (NF4) | 6-8GB | Targeted sarcastic dialogue | Best for roast-y, responsive banter |
| Mistral-NeMo-Instruct | 12B | 4-bit (Q4_K_M) | 8-10GB | Unhinged, creative humor | Broader wit, but less sarcasm-focused |
| SilentMyuth/sarcastic-model | 345M | FP16 | <1GB | Basic Reddit-style sarcasm | Too small/lightweight for nuanced English |
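The weight-memory part of the table's estimates can be sanity-checked as parameters × bits per weight ÷ 8. This is a lower bound under simple assumptions: it uses the nominal parameter counts from the table and exactly 4 or 16 bits per weight. Real NF4 and Q4_K_M files also store scale factors and often keep some layers at higher precision, so actual files and VRAM use run somewhat larger.

```python
def weight_gib(n_params: float, bits_per_weight: float) -> float:
    """Lower-bound weight storage in GiB: params * bits / 8 bytes."""
    return n_params * bits_per_weight / 8 / 2**30


# Nominal sizes from the table; bit widths are idealized (no quantization scales or overhead)
rows = [
    ("sweatSmile/Mistral-7B-Sarcasm (4-bit)", 7e9, 4),
    ("Mistral-NeMo-Instruct (4-bit)", 12e9, 4),
    ("SilentMyuth/sarcastic-model (FP16)", 345e6, 16),
]

for name, params, bits in rows:
    print(f"{name}: >= {weight_gib(params, bits):.2f} GiB of weights")
```

The gap between these floors and the table's VRAM column is the KV cache plus runtime overhead.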

How to Run It on Your MI25

- Setup: Install ROCm (AMD's CUDA equivalent) for MI25 support; version 5.7+ works well. Use `llama.cpp` or Ollama for the simplest deployment (GGUF files are prepped).

- Download the GGUF: From mradermacher/Mistral-7B-Instruct-v0.1-Sarcasm-GGUF. Grab the Q4_K_M file (~4.3GB).

- With Ollama (easiest for chat):

  - Create: `ollama create snarky-mistral -f Modelfile` (a Modelfile whose `FROM` line points to the downloaded GGUF).
  - Run: `ollama run snarky-mistral`.
  - Prompt example (`</s>` belongs only after a completed answer, never at the end of the prompt): `<s>[INST] Respond snarkily to this: "I just ran a marathon in under 2 hours." [/INST]` → Expect something like: "Oh wow, congrats on redefining 'average'. Did you stop for coffee midway?"

- With Hugging Face Transformers (for scripting):

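from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig
import torch

model_name = "sweatSmile/Mistral-7B-Instruct-v0.1-Sarcasm"

tokenizer = AutoTokenizer.from_pretrained(model_name)

# 4-bit settings go in a BitsAndBytesConfig; passing load_in_4bit straight to from_pretrained is deprecated
quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,  # Enforce 4-bit
    bnb_4bit_compute_dtype=torch.float16,
)

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map="auto",  # Place layers on the available GPU(s), spilling to CPU if needed
    torch_dtype=torch.float16,
    quantization_config=quantization_config,
)

# The tokenizer prepends <s> itself; </s> marks the end of an answer, so it must not end the prompt
prompt = "[INST] Roast my terrible cooking skills. [/INST]"

# ROCm builds of PyTorch also use the "cuda" device name; model.device covers both
inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

outputs = model.generate(**inputs, max_new_tokens=100, temperature=0.8, do_sample=True)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))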

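- VRAM tip: Monitor with `rocm-smi` (or `nvidia-smi` on an NVIDIA card such as the 3060 Ti). If memory is tight, drop to a 2k context. `trust_remote_code=True` only lets the repo run its own modeling code; it does not reduce VRAM.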

- Tips for Max Snark: Use Mistral's chat template (`[INST]` tags). Temperature 0.7-0.9 for wit; lower for drier sarcasm. Fine-tune further with PEFT/LoRA if you want domain-specific roasts (e.g., tech snark).
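Mistral-Instruct v0.1's documented format works like this:

- It starts the sequence with `<s>`.
- It wraps each user turn in `[INST] ... [/INST]`.
- It places `</s>` only after each completed assistant answer.

A prompt that ends in `</s>` therefore tells the model the sequence is already over. Below is a minimal multi-turn builder. It assumes the tokenizer prepends `<s>` itself, as the stock Mistral tokenizer does by default. The function name is hypothetical. If the model repo ships a chat template, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` is the more robust route.

```python
from typing import List, Tuple


def build_mistral_prompt(history: List[Tuple[str, str]], user_message: str) -> str:
    """Assemble a Mistral-Instruct v0.1 style prompt (hypothetical helper).

    history: completed (user, assistant) turns, oldest first.
    <s> is omitted because the tokenizer is assumed to prepend it.
    </s> closes each finished assistant answer but never the final prompt.
    """
    parts = [f"[INST] {user} [/INST] {assistant}</s>" for user, assistant in history]
    parts.append(f"[INST] {user_message} [/INST]")
    return "".join(parts)


print(build_mistral_prompt(
    [("Roast my cooking.", "Your smoke alarm has asked for overtime pay.")],
    "Now roast my parking.",
))
```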

If this doesn't hit the vibe (e.g., you want more "unhinged" over precise sarcasm), swap to Mistral-NeMo-Instruct-2407 Q4 GGUF—still fits, just prompt harder for snark. Test a few via Hugging Face Spaces first!
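With the Transformers route, `generate()` on a decoder-only model returns the prompt tokens followed by the continuation. Decoding `outputs[0]` whole therefore echoes the prompt back. Slicing off the prompt's token count before decoding returns only the reply. A small sketch, with a hypothetical helper name, assuming a single unbatched prompt:

```python
from typing import Any, Sequence


def decode_new_text(tokenizer: Any, prompt_ids: Sequence[int], output_ids: Sequence[int]) -> str:
    """Decode only the tokens generate() appended after the prompt.

    prompt_ids: the prompt's token ids for one sequence, e.g. inputs["input_ids"][0]
    output_ids: the matching row of generate()'s output, e.g. outputs[0]
    Assumes a single, unbatched prompt, so both rows share the same prefix.
    """
    new_ids = output_ids[len(prompt_ids):]
    return tokenizer.decode(new_ids, skip_special_tokens=True).strip()
```

With the script above, the call would be `print(decode_new_text(tokenizer, inputs["input_ids"][0], outputs[0]))`. Slicing token ids avoids the misalignment you get from slicing the decoded string by the prompt's character length once special tokens are skipped.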

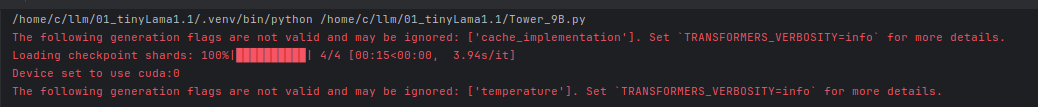

- It always takes some time to set up, as it downloads all the 'shards'.

- The first time it ran, it did not produce a prompt.

- We ran it again:

- And it still did not produce a prompt.

- The instructions for exposing this as an API that listens on a port are as follows:

# Requirements:
# pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121  # For CUDA 12.1; adjust if needed
# pip install transformers accelerate bitsandbytes sentencepiece
# pip install fastapi uvicorn  # For the API server

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel
import uvicorn
from typing import Optional

# Model name: The snarky Mistral-7B variant
model_name = "sweatSmile/Mistral-7B-Instruct-v0.1-Sarcasm"

# Quantization config: 4-bit to fit comfortably in 8GB VRAM on RTX 3060 Ti
quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.float16,
    bnb_4bit_use_double_quant=True,  # Also quantizes the quantization constants, saving a little more memory
)

# Global variables for model and tokenizer (loaded once)
model = None
tokenizer = None

# Pydantic model for request body
class PromptRequest(BaseModel):
    prompt: str
    max_new_tokens: Optional[int] = 150
    temperature: Optional[float] = 0.8
    top_p: Optional[float] = 0.9

# Load model and tokenizer at startup
def load_model():
    global model, tokenizer
    # No add_eos_token=True: appending </s> to every prompt tells the model the sequence is already over
    tokenizer = AutoTokenizer.from_pretrained(
        model_name,
        trust_remote_code=True,
        padding_side="left",
    )
    if tokenizer.pad_token is None:
        tokenizer.pad_token = tokenizer.eos_token
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        quantization_config=quantization_config,
        device_map="auto",  # Auto-distributes to GPU/CPU
        torch_dtype=torch.float16,
        trust_remote_code=True,
        # PyTorch's built-in SDPA attention; flash_attention_2 would also need the separate flash-attn package
        attn_implementation="sdpa",
    )
    # No model.to("cuda"): device_map has already placed the weights, and many
    # transformers/bitsandbytes versions raise an error on .to() for 4-bit models
    print("Model loaded successfully!")

# FastAPI app
app = FastAPI(title="Snarky Mistral API", description="API for generating sarcastic responses")

@app.on_event("startup")
async def startup_event():
    load_model()

# Plain def (not async): FastAPI runs it in a worker thread, so the blocking generate() call doesn't stall the server
@app.post("/generate")
def generate_response(request: PromptRequest):
    if model is None or tokenizer is None:
        raise HTTPException(status_code=500, detail="Model not loaded")
    # Format the prompt for Mistral Instruct; the tokenizer adds <s>, and </s> must not end the prompt
    formatted_prompt = f"[INST] {request.prompt} [/INST]"
    inputs = tokenizer(formatted_prompt, return_tensors="pt").to(model.device)
    # Generate with sampling for wit
    with torch.no_grad():
        outputs = model.generate(
            **inputs,
            max_new_tokens=request.max_new_tokens,
            temperature=request.temperature,
            top_p=request.top_p,
            do_sample=True,
            pad_token_id=tokenizer.eos_token_id,
            eos_token_id=tokenizer.eos_token_id,
        )
    # Decode only the newly generated tokens; slicing the decoded text by len(formatted_prompt)
    # misaligns because skip_special_tokens changes the text length
    prompt_length = inputs["input_ids"].shape[1]
    generated_response = tokenizer.decode(outputs[0][prompt_length:], skip_special_tokens=True).strip()
    return {"response": generated_response}

@app.get("/health")
async def health_check():
    return {"status": "healthy", "model_loaded": model is not None}

# Run the server: uvicorn main:app --host 0.0.0.0 --port 8000 --reload (for dev, if saved as main.py)
if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)

# Usage:
# 1. Run: python this_script.py
# 2. Query via curl: curl -X POST http://localhost:8000/generate -H "Content-Type: application/json" -d '{"prompt": "Roast my decision to buy a 3060 Ti in 2025."}'
# 3. Or use Swagger UI: Visit http://localhost:8000/docs
# VRAM: ~5-6GB on GPU; server listens indefinitely.
# For production: Use gunicorn + uvicorn workers if needed, but single worker is fine for low traffic.
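Besides curl and the Swagger UI, the `/generate` endpoint can be called from Python using only the standard library. This is a minimal sketch. It assumes the server above is running on `localhost:8000` and accepts the fields defined in `PromptRequest`. The helper names are hypothetical.

```python
import json
import urllib.request


def build_generate_request(prompt: str, url: str = "http://localhost:8000/generate",
                           **params) -> urllib.request.Request:
    """Build (but do not send) a JSON POST for the /generate endpoint.

    Extra keyword arguments (max_new_tokens, temperature, top_p) are passed
    through as JSON fields matching the server's PromptRequest model.
    """
    body = {"prompt": prompt, **params}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, url: str = "http://localhost:8000/generate",
             timeout: float = 120.0, **params) -> str:
    """Send the request and return the server's "response" field.

    urlopen raises urllib.error.HTTPError for non-2xx replies (e.g. the 500 "Model not loaded").
    """
    req = build_generate_request(prompt, url, **params)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Example call: `print(generate("Roast my decision to buy a 3060 Ti in 2025.", max_new_tokens=80))`. The generous timeout is a guess, meant to allow slow first generations on a quantized 7B model.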