LLM Workups: Running Falcon3-7b on a Minimal 3060ti Ryzen 9.

"Grok! Write the python to test and run Falcon3-7B LLM on a 3060ti gpu where some of it is offloaded to the cpu."

- 25 seconds later..

- It should be noted that the 3060 Ti is pretty much at the >>bottom<< of the chart among GPUs for running inference models. With its 8 GB of onboard VRAM, part of the workload will need offloading to the CPU.
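
To see why offloading is unavoidable here, a rough back-of-the-envelope estimate helps. The sketch below assumes a nominal 7 billion parameters and counts weights only; the helper name `weights_gib` is made up for illustration, and real usage will be higher once activations and the KV cache are included.

```python
# Rough weight-memory estimate for a model, weights only.
# Assumption: a nominal 7B parameter count; the exact count differs per model.

def weights_gib(params_billion: float, bytes_per_param: int) -> float:
    """Return the approximate size of the weights in GiB."""
    return params_billion * 1e9 * bytes_per_param / 1024**3

VRAM_GIB = 8  # onboard memory of the 3060 Ti

for dtype_name, nbytes in [("float32", 4), ("float16", 2), ("int8", 1)]:
    need = weights_gib(7, nbytes)
    overflow = max(need - VRAM_GIB, 0.0)
    print(f"{dtype_name}: ~{need:.1f} GiB of weights, ~{overflow:.1f} GiB beyond the GPU")
```

In FP16 the weights alone come to roughly 13 GiB, so several GiB have to live somewhere other than the GPU.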

    import torch
    from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline

    # Load the tokenizer and model. Assuming the model is 'tiiuae/falcon-7b'.
    # If 'Falcon3-7B' refers to a different variant, replace the model name accordingly.
    # device_map="auto" will automatically offload layers to CPU if GPU VRAM is
    # insufficient (e.g., on a 3060 Ti with 8GB VRAM).
    model_name = "tiiuae/falcon-7b"

    tokenizer = AutoTokenizer.from_pretrained(model_name)

    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        device_map="auto",          # Automatically handles GPU/CPU offloading
        torch_dtype=torch.float16,  # Use FP16 for reduced memory usage
        trust_remote_code=True,     # Required for some custom models
        offload_folder="offload",   # Folder for offloaded weights if needed
    )

    # Create a text generation pipeline for easy inference.
    # The model was already placed by device_map above, so the pipeline
    # does not need its own device argument.
    generator = pipeline("text-generation", model=model, tokenizer=tokenizer)

    # Test the model with a sample prompt
    prompt = "Hello, I am testing the Falcon-7B model. What is the capital of France?"
    output = generator(prompt, max_new_tokens=50, do_sample=True, top_k=50, top_p=0.95)

# Print the generated output

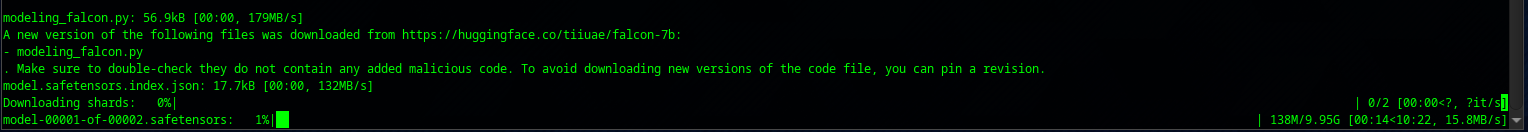

print(output[0]['generated_text'])Pulling the safe tensors was a 9.95 GB pull:
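
With `device_map="auto"` the split between GPU and CPU is decided for you. Here is a minimal sketch of two things that may help on an 8 GB card: building a `max_memory` mapping that leaves some headroom on the GPU, and summarizing where the modules ended up. The helper names, the 1 GiB headroom, the 32 GiB of system RAM, and the sample map are all assumptions for illustration. `max_memory` is an argument accepted by `from_pretrained` alongside `device_map`, and `hf_device_map` is the attribute found on models loaded with a device map.

```python
from collections import Counter

def build_max_memory(gpu_gib: int, cpu_gib: int, headroom_gib: int = 1) -> dict:
    """Build a max_memory mapping that keeps some GPU memory free (hypothetical helper)."""
    usable = max(gpu_gib - headroom_gib, 0)
    return {0: f"{usable}GiB", "cpu": f"{cpu_gib}GiB"}

def summarize_device_map(device_map: dict) -> dict:
    """Count how many modules were placed on each device (hypothetical helper)."""
    return dict(Counter(str(device) for device in device_map.values()))

# Assumed hardware: 8 GiB GPU, 32 GiB system RAM.
max_memory = build_max_memory(gpu_gib=8, cpu_gib=32)
print(max_memory)
# It could then be passed as:
#   AutoModelForCausalLM.from_pretrained(model_name, device_map="auto", max_memory=max_memory, ...)

# Made-up placement for illustration; after a real load, use model.hf_device_map instead.
sample_map = {
    "transformer.word_embeddings": 0,
    "transformer.h.0": 0,
    "transformer.h.1": 0,
    "transformer.h.2": "cpu",
    "lm_head": "cpu",
}
print(summarize_device_map(sample_map))
```

The more modules that land on `cpu`, the slower generation is likely to be, since those layers run in system RAM.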

- Loading time was significant: about 20 seconds.

- It should be noted that the lower the parameter count (the "B" in 7B), the more a model tends to hallucinate or go off topic.

- It did work!
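
To put figures like the 20-second load time on firmer footing, each stage can be wrapped in a small timer. This is only a sketch: `timed` is a hypothetical helper, and the `time.sleep` calls are placeholders standing in for the real `from_pretrained(...)` and `generator(...)` calls.

```python
import time
from contextlib import contextmanager

timings = {}  # label -> elapsed seconds

@contextmanager
def timed(label: str):
    """Record the wall-clock time spent inside the with-block."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start

with timed("load"):
    time.sleep(0.05)  # placeholder for loading the model

with timed("generate"):
    time.sleep(0.01)  # placeholder for generating text

for label, seconds in timings.items():
    print(f"{label}: {seconds:.2f} s")
```

Timing load and generation separately makes it easier to tell whether the wait comes from reading weights off disk or from layers running on the CPU.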