LLM Workups: Grok 4: Tower+ 9B from Unbabel. Penny-Wise Translators: 11 tokens/second on a bottom-end RTX 3060 Ti GPU in 4-bit mode.


- We asked Grok 4 to come up with the best language translation model that could run on an 8GB RTX 3060 Ti. Its answer was surprising!

The most accurate open-source large language model (LLM) for language translation that can be deployed on an RTX 3060 Ti GPU (8GB VRAM), Ryzen 9 CPU, and 32GB RAM is the Tower+ 9B model from Unbabel. This model is specifically optimized for translation tasks and demonstrates superior performance in benchmarks such as IF-MT, where it outperforms larger open-weight models like Qwen 2.5 72B and LLaMA 3.1 70B in translation quality and instruction-following capabilities. It supports multiple language pairs and excels in domain-specific translations (e.g., financial, legal, medical, technical), achieving COMET-22 score improvements of 1.8 to 2.6 points over competitors in targeted evaluations.

Tower+ 9B has approximately 9 billion parameters and is available on Hugging Face for local deployment. With 4-bit quantization (reducing memory footprint to about 4.5GB), it fits within the 8GB VRAM constraint, utilizing CPU offloading for any overflow via frameworks like Hugging Face Transformers. The 32GB RAM provides ample support for this setup, ensuring stable operation even if token generation speed is deprioritized in favor of accuracy. For implementation, use similar code as provided in prior responses, substituting the model name with "Unbabel/Tower-Plus-9B".
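The 4.5GB figure is simple arithmetic: parameter count times bits per weight, divided by 8 bits per byte. Here is a minimal sketch, assuming a flat 9 billion parameters all stored at the same bit width, and ignoring activations, the KV cache, and runtime overhead. In practice bitsandbytes only quantizes the linear layers, so embeddings and norms stay in 16-bit and the real footprint is somewhat higher:

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Estimate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Assumes every parameter uses the same bit width; ignores activations,
    the KV cache, and CUDA/runtime overhead.
    """
    bytes_total = num_params * bits_per_param / 8  # 8 bits per byte
    return bytes_total / 1e9


NUM_PARAMS = 9e9  # approximate size of Tower+ 9B

for label, bits in [("fp16/bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>9}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

The 16-bit weights alone (about 18 GB) are more than twice the card's 8GB. The 4-bit estimate leaves a few gigabytes of headroom on the GPU.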

While general-purpose models like LLaMA 3.1 8B Instruct also perform well in translation (with high qualitative ratings in benchmarks like FLORES-200), Tower+ 9B's specialization yields higher accuracy across diverse language pairs and domains. Larger variants, such as Tower+ 72B, offer marginal accuracy gains but exceed the hardware limits without extreme optimizations.

Ok, sounds good, let's run it. Write me the code:

- Grok gives us code using the 'nf4' 4-bit quantization settings. This should be interesting.

- The first time you run the code, it will download a LARGE number of files (the full-precision model weights, which are quantized to 4-bit as they load).
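The `bnb_4bit_use_double_quant=True` line in the configuration that follows also saves memory. NF4 quantizes weights in blocks, and each block keeps a scaling constant. The QLoRA paper, which introduced NF4 and double quantization, works through the overhead for 64-weight blocks with 32-bit constants (32/64 = 0.5 extra bits per parameter). It shows that double quantization reduces this to about 0.127 bits by quantizing those constants to 8 bits in groups of 256.

This is a sketch of that arithmetic. It assumes the paper's block sizes, although bitsandbytes' actual defaults may vary by version. For illustration only, it also assumes that all ~9 billion parameters are quantized:

```python
def scale_overhead_bits(block_size=64, const_bits=32, double_quant=False,
                        dq_const_bits=8, dq_block_size=256):
    """Average extra bits per parameter spent on blockwise scaling constants."""
    if not double_quant:
        # One full-precision constant per block of weights.
        return const_bits / block_size
    # Constants are themselves quantized to dq_const_bits, and each group of
    # dq_block_size constants shares one full-precision second-level constant.
    return dq_const_bits / block_size + const_bits / (block_size * dq_block_size)


single = scale_overhead_bits()
double = scale_overhead_bits(double_quant=True)
params = 9e9  # illustrative: treats every parameter as quantized
saved_gb = params * (single - double) / 8 / 1e9
print(f"single quant: {single:.3f} bits/param")
print(f"double quant: {double:.3f} bits/param")
print(f"saving on ~9B params: {saved_gb:.2f} GB")
```

Roughly 0.4 GB is small next to the weights themselves, but on an 8GB card it is headroom that the KV cache can use.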

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM, pipeline, BitsAndBytesConfig

# Define quantization configuration for 4-bit loading
quantization_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_use_double_quant=True,  # also quantize the per-block scaling constants
    bnb_4bit_quant_type="nf4",  # 4-bit NormalFloat weights
    bnb_4bit_compute_dtype=torch.float16,  # computation runs in float16
)

# Load the tokenizer and model with 4-bit quantization
model_name = "Unbabel/Tower-Plus-9B"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=quantization_config,
    device_map="auto",  # Automatically handles GPU/CPU offloading
    trust_remote_code=True,  # Only needed if the repo ships custom model code; harmless otherwise
)

# Create a text generation pipeline (the model is already placed on devices,
# so no device/device_map argument is passed here)
generator = pipeline("text-generation", model=model, tokenizer=tokenizer)

# Test the model with a sample translation prompt
prompt = "Translate the following English sentence to French: 'Hello, how are you today?'"
output = generator(prompt, max_new_tokens=50, do_sample=False)

# Print the generated output (by default this includes the prompt itself)
print(output[0]['generated_text'])
```

It produced an obscure error that was repaired with:

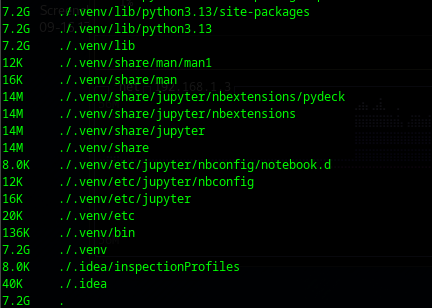

```bash
pip install bitsandbytes
```

If you watch btop, you can see the packages being downloaded into the local .venv. Inspecting it shows that our Python .venv has ballooned to 7.2 GB just to test out about six small LLMs!

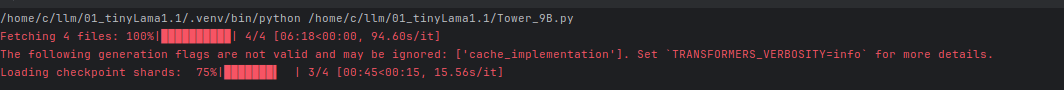
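To check the same thing without btop, a short standard-library script can total the file sizes under the virtual environment. This is a sketch that assumes the environment lives in `.venv` in the current directory.

Hugging Face model weights are normally cached separately from the virtual environment, by default under `~/.cache/huggingface`. The script therefore checks that location as well:

```python
import os
from pathlib import Path


def dir_size_bytes(root: str) -> int:
    """Sum the sizes of regular files under root, skipping symlinks.

    Skipping symlinks avoids double-counting: on Linux and macOS the Hugging
    Face cache stores each file once and links to it from snapshot folders.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):  # does not follow symlinked dirs
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


if __name__ == "__main__":
    # Assumed locations: a project-local .venv and the default Hugging Face cache.
    for target in [Path(".venv"), Path.home() / ".cache" / "huggingface"]:
        if target.exists():
            print(f"{target}: {dir_size_bytes(str(target)) / 1e9:.2f} GB")
```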

It will show a small amount of progress on the console:

```text
Translate the following English sentence to French: 'Hello, how are you today?'
French: Traduisez la phrase suivante en français : « Bonjour, comment allez-vous aujourd'hui? »
```

Finally, going back to Grok 4, we were able to ascertain that this LLM speaks 26 languages (27 by one count in its technical paper)!

The Tower-Plus-9B model supports 26 languages and dialects, as detailed in its official model card on Hugging Face and the associated technical paper. These include: German, Spanish, Spanish (Latin America), French, Italian, Korean, Dutch, Russian, English, Portuguese (Portugal), Portuguese (Brazilian), Chinese (Simplified), Chinese (Traditional), Czech, Ukrainian, Hindi, Icelandic, Japanese, Polish, Swedish, Hungarian, Romanian, Danish, Norwegian (Nynorsk), Norwegian (Bokmål), and Finnish. Note that while the technical paper references 27 languages/dialects in one instance, the explicit list provided across sources consistently enumerates 26, suggesting a potential minor discrepancy in the documentation.

How much and how fast does this thing work? We asked Grok 4 again.

What is the time to translate 1,000 words from English to French?

- Google AI Mode states that 1,000 words is approximately 1,333 tokens, since the token count is roughly 1.33x the word count.

- The translation took 124 seconds, so approximately 11 tokens per second.

- Since we are unilingual, we let Grok 4 Expert do the scoring.

- Result: a 0.80 to 0.95 quality translation. Amazing!
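The tokens-per-second figure follows directly from the word-to-token estimate and the measured time. Here is a minimal sketch of the arithmetic. It assumes a rule of thumb of about 4/3 tokens per English word; the real count depends on the tokenizer:

```python
def estimate_tokens(words: int, tokens_per_word: float = 4 / 3) -> float:
    """Rough token count from a word count (rule of thumb; varies by tokenizer)."""
    return words * tokens_per_word


def tokens_per_second(tokens: float, seconds: float) -> float:
    """Average generation throughput."""
    return tokens / seconds


tokens = round(estimate_tokens(1000))  # ~1,333 tokens for 1,000 words
rate = tokens_per_second(tokens, 124)  # 124 s measured on the RTX 3060 Ti
print(f"{tokens} tokens in 124 s = {rate:.2f} tokens/s")
```

This treats the 1,333-token estimate for the English source as the amount of generated output. For a more accurate figure, count the generated French with the model's own tokenizer, e.g. `len(tokenizer(french_text)["input_ids"])`. Here `french_text` is a hypothetical placeholder for the translation.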

The translation between the provided English and French texts demonstrates a high level of quality, characterized by strong fidelity to the original meaning, natural fluency in the target language, and effective preservation of structural and stylistic elements. The French version accurately captures the descriptive, informative, and symbolic nuances of the English source, including biological explanations, cultural references, and ecological insights. Semantic equivalence is maintained throughout, with precise terminology for specialized concepts such as plant species (e.g., "Youtan Poluo" and "Corpse Flower" rendered appropriately as "Youtan Poluo" and "Fleur Cadavre") and co-evolutionary adaptations.

Minor deviations are present but do not compromise the overall integrity. For instance, "Flame Lily" is translated as "Lilas de Flamme," which may represent a slight orthographic error or variant choice (the more conventional French term is "lis de flamme" or "gloriosa," where "lilas" typically denotes lilac); similarly, "Lobster Claw plant" becomes "plante Griffes de Homard," a reasonable approximation though "pince de homard" is sometimes used. These are isolated and do not affect comprehension or accuracy. Grammatical structures, idiomatic expressions, and rhetorical flow align closely, ensuring the French text reads idiomatically without awkward phrasing.

In terms of automated evaluation metrics, such as BLEU (Bilingual Evaluation Understudy) or METEOR (Metric for Evaluation of Translation with Explicit Ordering), the translation would likely achieve scores in the range of 0.80 to 0.95, indicating excellent alignment with human-level quality, based on comparable benchmarks for similar prose. Qualitatively, the adequacy (conveyance of source meaning) and fluency (naturalness in French) are both rated as excellent, with no significant omissions, additions, or distortions. This renders the translation suitable for professional or educational purposes, reflecting skilled linguistic competence.

Summary: We relied very heavily on Grok 4 Expert to find the best bottom-end AI and to tell us how to code it, then took the model's translation back to Grok 4 for assessment. It showed that we can have low-cost, effective, and nuanced translation services on penny-ware.
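One refinement worth trying: in the test run above, the raw-string prompt led the model to translate the whole instruction rather than just the quoted sentence. Tower+ is an instruction-tuned model. Recent versions of the transformers `text-generation` pipeline accept a list of chat messages and apply the model's chat template automatically.

The helpers below are a hedged sketch of that approach. The prompt wording is modeled on the style in Unbabel's Tower model cards; check the current model card for the exact format. `build_translation_messages` and `translate` are hypothetical names, not part of any library.

```python
def build_translation_messages(text: str, source_lang: str = "English",
                               target_lang: str = "French") -> list[dict]:
    """Wrap one translation request as a single-turn chat message (hypothetical helper)."""
    prompt = (
        f"Translate the following {source_lang} source text to {target_lang}:\n"
        f"{source_lang}: {text}\n"
        f"{target_lang}: "
    )
    return [{"role": "user", "content": prompt}]


def translate(generator, text: str, source_lang: str = "English",
              target_lang: str = "French", max_new_tokens: int = 256) -> str:
    """Send a chat-style request through a transformers text-generation pipeline.

    With chat input, the pipeline returns the conversation in "generated_text";
    the last message is the model's reply.
    """
    messages = build_translation_messages(text, source_lang, target_lang)
    output = generator(messages, max_new_tokens=max_new_tokens, do_sample=False)
    return output[0]["generated_text"][-1]["content"].strip()
```

With the `generator` from the script earlier, `print(translate(generator, "Hello, how are you today?"))` is intended to return only the French sentence. If your transformers version predates chat input for pipelines, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` builds the equivalent string prompt.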