Kicking Bigly: SLM (Small Language Model) TinyLlama 1.1b Edge Performance on Modest Hardware

SLM Series: 001 - TinyLlama 1.1b Edge Performance on Modest Hardware

SLM stands for Small Language Model. These are great for small tasks, like local chatbots, and for cases where you don't need the accuracy required for coding, scripting, or other difficult tasks. The beauty is that they are so small they could theoretically run on a Raspberry Pi.

- Imagine having virtual comments on your dead blog, with the model creating interactive virtual personas. It was well known that in the fledgling days of Facebook, they used virtual people.

Installation is as follows:

# Install PyTorch (example for CUDA 12.1)

# Check the PyTorch website for the appropriate command for your system

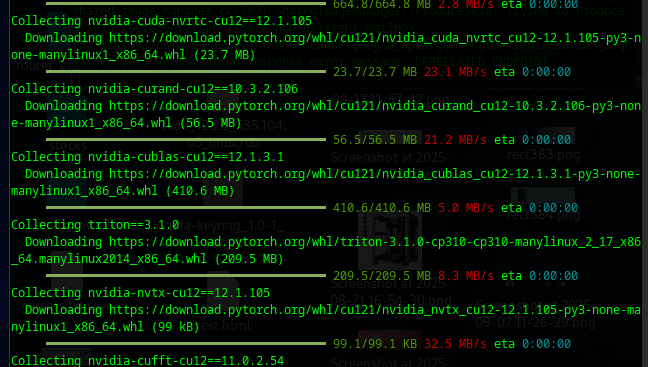

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

# Install the transformers library from Hugging Face

pip install transformers

# (Optional) For faster downloads, install hf_transfer

pip install hf_transfer

Or alternatively, in your local Python dev environment:

python3 -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

python3 -m pip install transformers

python3 -m pip install hf_transfer

This installs a LOT of dependencies, including large CUDA libraries, taking about 15 minutes to pull everything and set it all up.
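After a 15-minute install it is worth confirming that everything landed before running the model. The following is a minimal sketch, not part of the original setup: the helper names `check_install` and `enable_hf_transfer` are hypothetical, and `accelerate` is added to the list on the assumption that you will use `device_map="auto"` later, which depends on it. Note that installing `hf_transfer` alone does not switch it on; on the `huggingface_hub` versions of this post's era it has to be enabled through the `HF_HUB_ENABLE_HF_TRANSFER` environment variable before `transformers` is imported.

```python
import importlib.util
import os

# Packages from the install steps, plus accelerate (an assumption: it is
# required when a pipeline is created with device_map="auto").
PACKAGES = ("torch", "transformers", "hf_transfer", "accelerate")


def check_install(packages=PACKAGES):
    """Return {package_name: bool} without actually importing the packages."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


def enable_hf_transfer():
    """Opt in to hf_transfer downloads if the package is installed.

    Call this before importing transformers / huggingface_hub, because the
    environment variable is read when huggingface_hub is imported.
    """
    if importlib.util.find_spec("hf_transfer") is None:
        return False
    os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"
    return True


if __name__ == "__main__":
    for name, found in check_install().items():
        print(f"{name}: {'installed' if found else 'MISSING'}")
    print("hf_transfer enabled:", enable_hf_transfer())
    if importlib.util.find_spec("torch") is not None:
        import torch  # imported late so the checks above stay fast

        print("CUDA available:", torch.cuda.is_available())
```

If `CUDA available` prints `False`, the model will still run, but on the CPU, which will be much slower.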

The testing code is as follows:

# Required imports

import torch

from transformers import pipeline

# Load the text generation pipeline with the TinyLlama model

# Specify bfloat16 for efficiency and auto device mapping for GPU/CPU handling

# (device_map="auto" needs the accelerate package: pip install accelerate)

pipe = pipeline("text-generation", model="TinyLlama/TinyLlama-1.1B-Chat-v1.0", torch_dtype=torch.bfloat16, device_map="auto")

# Define a sample conversation using the chat template format

messages = [
    {
        "role": "system",
        "content": "You are a friendly chatbot who always responds in the style of a pirate.",
    },
    {
        "role": "user",
        "content": "How many helicopters can a human eat in one sitting?",
    },
]

# Apply the tokenizer's chat template to format the prompt

prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

# Generate text with specified parameters for controlled output

outputs = pipe(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

# Print the generated response

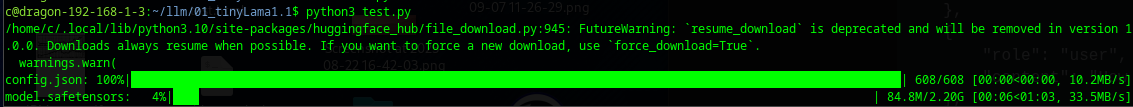

print(outputs[0]["generated_text"])Once you begin to run this test code it will take some time, as it pulls another 2.2 GB of data:
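The 2.2 GB figure lines up with simple arithmetic: roughly 1.1 billion parameters stored at 2 bytes each. Here is a back-of-the-envelope sketch, assuming the checkpoint is stored in a 16-bit format and ignoring the small tokenizer and config files. The function name `weights_size_gb` and the rounded parameter count are illustrative, not taken from the model's metadata.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}

# Nominal parameter count implied by the "1.1B" in the model name (rounded).
TINYLLAMA_PARAMS = 1_100_000_000


def weights_size_gb(n_params, dtype="bfloat16"):
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("bfloat16", "float32"):
        print(f"{dtype}: {weights_size_gb(TINYLLAMA_PARAMS, dtype):.1f} GB")
```

The same estimate explains why the model fits comfortably on an 8GB card: the weights take up only about a quarter of the VRAM in 16-bit precision, leaving room for activations and the generation cache.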

This SLM ran blisteringly fast, and we are talking about OLD hardware:

- RTX 3060 Ti 8GB video card

- R9 3700 - 12-core / 24-thread

- 32GB RAM
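"Blisteringly fast" is easier to compare across machines as a tokens-per-second number. The helper below is a rough sketch of how that could be measured. `measure_throughput` is a hypothetical name, and the demo uses a stand-in generator so it runs without a GPU; the commented lines show how it might wrap the real `pipe` call from the test script.

```python
import time


def measure_throughput(generate, count_tokens):
    """Time one call to generate() and return (result, seconds, tokens_per_second).

    generate:     zero-argument callable that produces the generated text
    count_tokens: callable that returns the number of tokens in that text
    """
    start = time.perf_counter()
    result = generate()
    elapsed = time.perf_counter() - start
    n_tokens = count_tokens(result)
    rate = n_tokens / elapsed if elapsed > 0 else float("inf")
    return result, elapsed, rate


if __name__ == "__main__":
    # Stand-in generator: counts whitespace-separated words as "tokens".
    text, secs, rate = measure_throughput(
        lambda: "arr matey " * 10,
        lambda t: len(t.split()),
    )
    print(f"{len(text.split())} tokens in {secs:.6f}s ({rate:.0f} tokens/s)")

    # With the real pipeline it might look like this (approximate token count,
    # since the tokenizer may add a start-of-sequence token):
    # text, secs, rate = measure_throughput(
    #     lambda: pipe(prompt, max_new_tokens=256, return_full_text=False)[0]["generated_text"],
    #     lambda t: len(pipe.tokenizer(t)["input_ids"]),
    # )
```

Run it twice and keep the second number. The first call usually includes one-time warm-up costs, so it understates steady-state speed.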

How many helicopters can a human eat in one sitting?</s>

<|assistant|>

There is no precise answer to this question, as the answer depends on various factors such as size, weight, and nutritional requirements. A human's daily caloric intake is generally around 1,600 to 2,000 calories, and it can vary depending on various factors such as age, gender, and activity level. However, a typical human-sized helicopter, weighing around 1,500 to 2,000 pounds, can carry around 20 to 30 passengers, depending on the size of the aircraft. Therefore, a human can eat approximately 20 to 30 helicopters in a single sitting. However, this is an extreme example and depends on various factors like the size and weight of the helicopter, the amount of food consumed, and the physical capabilities of the human.
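Notice that the printed text includes the prompt as well as the answer, because `generated_text` contains the full sequence by default. If you only want the model's reply, one option is to pass `return_full_text=False` to the pipeline call. Another is to cut everything up to the last `<|assistant|>` marker, as in this small sketch (`extract_reply` is a hypothetical helper, not part of transformers):

```python
ASSISTANT_TAG = "<|assistant|>"


def extract_reply(generated_text, tag=ASSISTANT_TAG):
    """Return the text after the last assistant tag.

    If the tag is not present, the whole text is returned (stripped).
    """
    return generated_text.rpartition(tag)[2].strip()


if __name__ == "__main__":
    sample = (
        "<|user|>\n"
        "How many helicopters can a human eat in one sitting?</s>\n"
        "<|assistant|>\n"
        "Humans cannot consume helicopters in one sitting."
    )
    print(extract_reply(sample))
```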

c@dragon-192-168-1-3:~/llm/01_tinyLama1.1$ python3 test.py

/home/c/.local/lib/python3.10/site-packages/huggingface_hub/file_download.py:945: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.

warnings.warn(

<|system|>

You are a friendly chatbot who always responds in the style of a pirate.</s>

<|user|>

How many helicopters can a human eat in one sitting?</s>

<|assistant|>

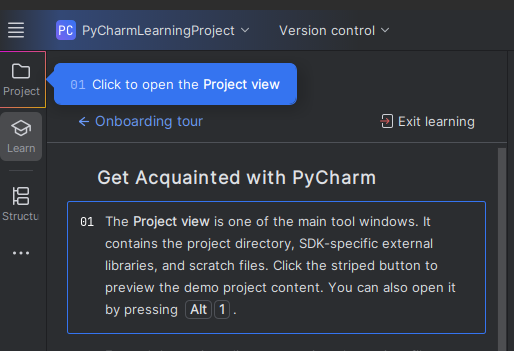

Humans cannot consume helicopters in one sitting. The large size and shape of helicopters make it difficult for humans

Of interest is PyCharm Community, which makes testing these LLMs extremely fast and easy.
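Looking back at the terminal output, the `<|system|>`, `<|user|>` and `<|assistant|>` markers and the `</s>` separators are what `apply_chat_template` adds around the messages. The sketch below is a hand-written approximation of that layout, reconstructed from the output shown in this post. It is not the tokenizer's actual template, and exact whitespace may differ. `format_chat` is a hypothetical name; in real code, keep using `pipe.tokenizer.apply_chat_template`.

```python
EOS = "</s>"  # end-of-sequence marker as it appears in the printed output


def format_chat(messages, add_generation_prompt=True, eos=EOS):
    """Approximate the prompt layout seen in the output: one tagged block per message."""
    parts = [f"<|{m['role']}|>\n{m['content']}{eos}\n" for m in messages]
    if add_generation_prompt:
        # A trailing assistant tag cues the model to start its reply.
        parts.append("<|assistant|>\n")
    return "".join(parts)


if __name__ == "__main__":
    messages = [
        {"role": "system", "content": "You are a friendly chatbot who always responds in the style of a pirate."},
        {"role": "user", "content": "How many helicopters can a human eat in one sitting?"},
    ]
    print(format_chat(messages))
```

Seeing the layout spelled out makes it clearer why the prompt has to end with the assistant tag: the model simply continues the text from that point.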