Linux/LLM Speed Bandwidth Testing mbw / nvbandwidth

The #1 factor limiting how quickly your LLM will run is memory bandwidth.

- Memory Bandwidth of your RAM.

- Memory Bandwidth of your GPU.

- How quickly data can get between the GPU and the CPU over your very slow PCIe bus.

1. Memory Bandwidth of your RAM.

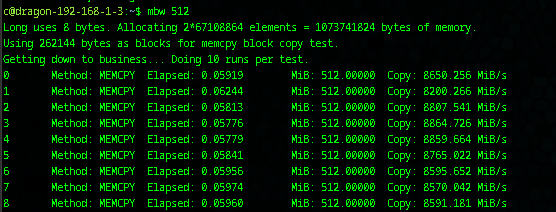

- In Linux we can test this quickly using the mbw tool:

sudo apt install mbw -y

Once you have it installed, you can run it with simply:

mbw 512

This runs mbw's default 10 iterations of each test against a 512 MiB array.

By LLM standards this RAM is very slow, at only 8.5-15.5 GB/s of bandwidth.
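To put those numbers in context, a common rule of thumb is that generating each token requires streaming every model weight through memory once, so bandwidth divided by model size gives a rough ceiling on tokens per second. The sketch below applies that to the two mbw figures above. The 4 GB model size is a hypothetical example, not something measured here, and real throughput will be lower than this ceiling.

```python
def max_tokens_per_second(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Rough upper bound on decode speed if every weight is read once per token."""
    if model_size_gb <= 0:
        raise ValueError("model_size_gb must be positive")
    return bandwidth_gb_s / model_size_gb


# Hypothetical model size for illustration only (roughly a 7B model at 4-bit).
MODEL_SIZE_GB = 4.0

# The low and high RAM bandwidth figures reported by mbw above.
for label, bandwidth in [("mbw low", 8.5), ("mbw high", 15.5)]:
    ceiling = max_tokens_per_second(bandwidth, MODEL_SIZE_GB)
    print(f"{label}: {bandwidth} GB/s -> at most {ceiling:.1f} tokens/s")
```

Swap in your own mbw result and the on-disk size of your model file to get a quick sanity check before blaming anything else.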

2. Memory Bandwidth From your CPU to your GPU.

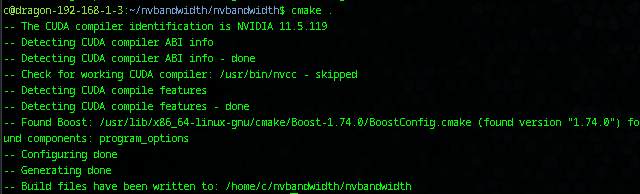

We can now move on to testing our CPU to GPU (PCIe) bandwidth, using nvbandwidth.

You will need to install some prerequisites first, including nvcc.

sudo apt update -y

sudo apt install git build-essential libboost-program-options-dev cmake -y

sudo apt install nvidia-cuda-toolkit

Once you have the build tools, you can then build nvbandwidth:

git clone https://github.com/NVIDIA/nvbandwidth.git

cd nvbandwidth

cmake .

If it went cleanly it will look like:

Alternatively, you can set:

cmake -DMULTINODE=1 .

There was an error that required editing testcase.cpp as follows:

- Comment out line 61

- Add 'supportsMulticast = 1;' below it

// CU_ASSERT(cuDeviceGetAttribute(&supportsMulticast, CU_DEVICE_ATTRIBUTE_MULTICAST_SUPPORTED, dev));

supportsMulticast = 1;

After this, calling the installation script is the simplest and cleanest way to get it running:

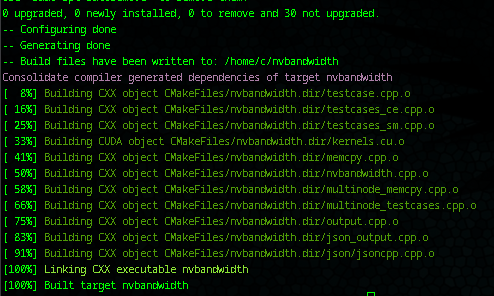

sudo ./debian_install.sh

You should get a build like:

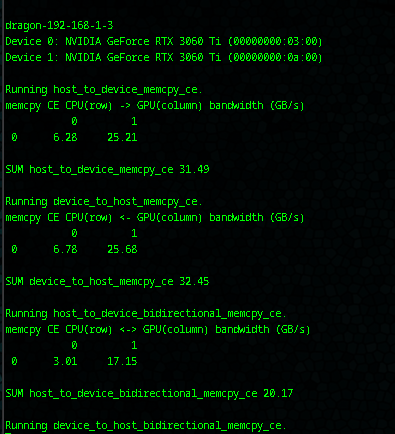

Running it with no options will automatically query every GPU in your system and benchmark them in various configurations: GPU to GPU, GPU to CPU and CPU to GPU. There are actually 35 different tests you can run (shown below).

We can see our information for both video cards, showing VERY SLOW performance on one of them:

Device 0: NVIDIA GeForce RTX 3060 Ti (00000000:03:00)

Device 1: NVIDIA GeForce RTX 3060 Ti (00000000:0a:00)

Running device_to_host_memcpy_ce.

memcpy CE CPU(row) <- GPU(column) bandwidth (GB/s)

0 1

0 6.78 25.68

- Card 0 - 6.78 GB/s / Card 1 - 25.68 GB/s!? How! Why?!

- The result is from a generic low-end consumer motherboard that gives you one fast PCIe slot, never expecting you to run more than one GPU. This right here is the major cause of bottlenecks in a home LLM setup - your lack of PCIe bandwidth!

We can identify the motherboard without walking over to the computer by simply running:

sudo dmidecode -t baseboard

This gives us all the details on this basic motherboard:

Handle 0x0002, DMI type 2, 15 bytes

Base Board Information

Manufacturer: ASUSTeK COMPUTER INC.

Product Name: TUF GAMING X570-PLUS

Version: Rev X.0x

Serial Number: 191060782600654

Asset Tag: Default string

Features:

Board is a hosting board

Board is replaceable

Location In Chassis: Default string

Chassis Handle: 0x0003

Type: Motherboard

Contained Object Handles: 0

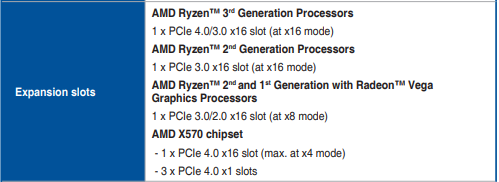

At this point you pretty much need to pull the manual for your motherboard and check its specs.

The following speed table gives a quick idea of what we are dealing with:

PCIe Speed Table

The following table outlines the maximum theoretical speeds, per direction, for different generations of PCI Express (PCIe) based on the number of lanes. Each generation roughly doubles the bandwidth of the previous one.

PCIe Speed by Generation and Lane Count

| PCIe Generation | Year Released | Data Transfer Rate | Bandwidth (x1) | Bandwidth (x16) |
|---|---|---|---|---|
| PCIe 1.0 | 2003 | 2.5 GT/s | 250 MB/s | 4.0 GB/s |
| PCIe 2.0 | 2007 | 5.0 GT/s | 500 MB/s | 8.0 GB/s |
| PCIe 3.0 | 2010 | 8.0 GT/s | 1.0 GB/s | 16.0 GB/s |
| PCIe 4.0 | 2017 | 16.0 GT/s | 2.0 GB/s | 32.0 GB/s |
| PCIe 5.0 | 2019 | 32.0 GT/s | 4.0 GB/s | 64.0 GB/s |
| PCIe 6.0 | 2022 | 64.0 GT/s | 8.0 GB/s | 128.0 GB/s |

Key Points

- Lanes: PCIe connections can have different lane configurations (x1, x4, x8, x16).

- Backward Compatibility: Newer PCIe generations are generally backward compatible with older versions.

- Real-World Performance: Actual speeds may be about 15% lower due to system overhead and other factors.
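The table's figures can be reproduced from the per-lane transfer rate and the line encoding, and the same arithmetic can be turned around to guess which link a measured number came from. The sketch below does both for the 6.78 GB/s and 25.68 GB/s results measured earlier. The 70% lower cut-off and the restriction to PCIe 3.0/4.0 are assumptions chosen for illustration, and PCIe 6.0 is left out because it uses a different encoding scheme.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency per generation.
# Generations 1-2 use 8b/10b encoding; generations 3-5 use 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def theoretical_gb_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s for a PCIe link."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer carries one bit per lane; divide by 8 to get bytes.
    return rate_gt_s * efficiency * lanes / 8


def candidate_links(measured_gb_s, generations=(3, 4),
                    widths=(1, 4, 8, 16), min_efficiency=0.7):
    """Links whose theoretical speed is at, or modestly above, the measurement.

    min_efficiency is an assumed cut-off, not a value from any specification.
    """
    matches = []
    for gen in generations:
        for lanes in widths:
            ratio = measured_gb_s / theoretical_gb_s(gen, lanes)
            if min_efficiency <= ratio <= 1.0:
                matches.append((gen, lanes))
    return matches


# The two device-to-host results reported by nvbandwidth earlier.
for measured in (6.78, 25.68):
    print(measured, "GB/s could be (generation, lanes):", candidate_links(measured))
```

Note that bandwidth alone cannot distinguish a PCIe 3.0 x8 link from a PCIe 4.0 x4 link, since both have the same theoretical throughput; inspecting the link directly settles it.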

In our case we have one slot at roughly 25 GB/s and one at 6.78 GB/s, so clearly we have quite a bottleneck, and yes, this is definitely going to significantly affect performance.

- We are looking at one slot giving us a 'gaming performance' of ~25 GB/s and one slot most likely running PCIe 3.0 x8. We can actually look this all up with a bit of inspection.

lspci | grep NVIDIA

This gives us:

03:00.0 VGA compatible controller: NVIDIA Corporation GA104 [GeForce RTX 3060 Ti Lite Hash Rate] (rev a1)

03:00.1 Audio device: NVIDIA Corporation GA104 High Definition Audio Controller (rev a1)

0a:00.0 VGA compatible controller: NVIDIA Corporation GA104 [GeForce RTX 3060 Ti] (rev a1)

0a:00.1 Audio device: NVIDIA Corporation GA104 High Definition Audio Controller (rev a1)

Note the bus addresses at the start of each line; we can use them to get more information. To inspect the first card:

lspci -s <bus:device.function>

So:

sudo lspci -s 03:00.0 -vvv

- This must be run with sudo. Without it you get some information, but not the link capabilities:

LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <512ns, L1 <4us

When we check our second card we see:

sudo lspci -s 0a:00.0 -vvv

And our link cap is:

LnkCap2: Supported Link Speeds: 2.5-16GT/s, Crosslink- Retimer+ 2Retimers+ DRS-

Both cards advertise up to 16 GT/s (PCIe 4.0), so the card's capability alone does not explain the slow result; the limit can also come from the motherboard slot the card sits in.
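LnkCap and LnkCap2 only describe what the device supports. The same lspci -vvv output also contains a LnkSta line, which reports the speed and width the link actually negotiated. A minimal sketch for pulling both out of saved lspci output follows; the LnkCap line in the sample is the one shown above, while the LnkSta line is a made-up example of a downgraded link, not output captured from this machine.

```python
import re

# Matches lines such as "LnkCap: Port #0, Speed 16GT/s, Width x16, ...".
# LnkCap2 and LnkSta2 are deliberately not matched.
LINK_LINE = re.compile(r"Lnk(Cap|Sta):.*?Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)")


def parse_links(lspci_text: str) -> dict:
    """Return {'Cap': (gt_s, lanes), 'Sta': (gt_s, lanes)} for the lines found."""
    links = {}
    for line in lspci_text.splitlines():
        match = LINK_LINE.search(line)
        if match:
            kind, speed, width = match.groups()
            links[kind] = (float(speed), int(width))
    return links


# LnkCap is taken from the output above; LnkSta is a hypothetical example.
sample = """
LnkCap: Port #0, Speed 16GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <512ns, L1 <4us
LnkSta: Speed 8GT/s (downgraded), Width x8 (downgraded)
"""

links = parse_links(sample)
print("supported: ", links.get("Cap"))
print("negotiated:", links.get("Sta"))

cap, sta = links.get("Cap"), links.get("Sta")
if cap and sta and (sta[0] < cap[0] or sta[1] < cap[1]):
    print("link is running below what the card supports")
```

To use it on a real system, save the output of sudo lspci -s 03:00.0 -vvv to a file and pass the file contents to parse_links. Link speed can also drop while the GPU is idle, so it is worth checking under load.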

We can carry on from here, but the end result is simply that we do not have industrial-grade equipment, so do not expect industrial-grade performance... But for a home LLM on a minuscule budget - it is respectable.

Part 2: Internal Inspection of nvbandwidth:

- There are actually a large number of tests that nvbandwidth supports:

./nvbandwidth --list

0, host_to_device_memcpy_ce:

1, device_to_host_memcpy_ce:

2, host_to_device_bidirectional_memcpy_ce:

3, device_to_host_bidirectional_memcpy_ce:

4, device_to_device_memcpy_read_ce:

5, device_to_device_memcpy_write_ce:

6, device_to_device_bidirectional_memcpy_read_ce:

7, device_to_device_bidirectional_memcpy_write_ce:

8, all_to_host_memcpy_ce:

9, all_to_host_bidirectional_memcpy_ce:

10, host_to_all_memcpy_ce:

11, host_to_all_bidirectional_memcpy_ce:

12, all_to_one_write_ce:

13, all_to_one_read_ce:

14, one_to_all_write_ce:

15, one_to_all_read_ce:

16, host_to_device_memcpy_sm:

17, device_to_host_memcpy_sm:

18, host_to_device_bidirectional_memcpy_sm:

19, device_to_host_bidirectional_memcpy_sm:

20, device_to_device_memcpy_read_sm:

21, device_to_device_memcpy_write_sm:

22, device_to_device_bidirectional_memcpy_read_sm:

23, device_to_device_bidirectional_memcpy_write_sm:

24, all_to_host_memcpy_sm:

25, all_to_host_bidirectional_memcpy_sm:

26, host_to_all_memcpy_sm:

27, host_to_all_bidirectional_memcpy_sm:

28, all_to_one_write_sm:

29, all_to_one_read_sm:

30, one_to_all_write_sm:

31, one_to_all_read_sm:

32, host_device_latency_sm:

33, device_to_device_latency_sm:

34, device_local_copy: << - This one measures internal GB/s!

So to see the internal bandwidth of the video card, run test 34:

./nvbandwidth -t 34

We see the true speed results internal to the card.

Device 0: NVIDIA GeForce RTX 3060 Ti (00000000:03:00)

Device 1: NVIDIA GeForce RTX 3060 Ti (00000000:0a:00)

Running device_local_copy.

memcpy local GPU(column) bandwidth (GB/s)

0 1

0 207.27 207.21

SUM device_local_copy 414.48

NOTE: The reported results may not reflect the full capabilities of the platform.

Performance can vary with software drivers, hardware clocks, and system topology.

Summary - This really shows you where your bottlenecks can sit. Even though the NVIDIA 3060 Ti (now considered a very basic baseline card) can have internal memory bandwidth of "448 GB/s", when an LLM is inferencing across a very slow PCIe 3.0 x8 link you will lose a LOT of your speed.

This is why 48 GB and 96 GB video cards are astronomically priced: they allow an entire LLM to run without going out onto the clogged and comparatively very slow PCIe bus.
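To make the gap concrete, the sketch below converts the bandwidth figures reported by nvbandwidth in this post into the time needed to move a fixed amount of data along each path. The 1 GB payload is a hypothetical round number chosen for illustration.

```python
# Bandwidth figures reported by nvbandwidth earlier in this post (GB/s).
MEASURED_GB_S = {
    "GPU 0 local copy": 207.27,
    "GPU 1 to host over PCIe": 25.68,
    "GPU 0 to host over PCIe": 6.78,
}


def transfer_ms(size_gb: float, bandwidth_gb_s: float) -> float:
    """Milliseconds needed to move size_gb at a sustained bandwidth."""
    return size_gb / bandwidth_gb_s * 1000


# Hypothetical payload: 1 GB of data that has to travel along each path.
PAYLOAD_GB = 1.0

for path, bandwidth in MEASURED_GB_S.items():
    print(f"{path}: {transfer_ms(PAYLOAD_GB, bandwidth):.1f} ms per GB")

slowdown = MEASURED_GB_S["GPU 0 local copy"] / MEASURED_GB_S["GPU 0 to host over PCIe"]
print(f"local copy is about {slowdown:.0f}x faster than the slow slot")
```

Any data that has to leave the card on every token pays the PCIe price each time, which is why keeping the whole model in one card's memory matters so much.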

The TRUE measured speed of the 3060 Ti was only 207 GB/s, not even close to the various claimed figures of 336/448 GB/s. (Keep in mind that a local copy both reads and writes every byte, so the memory traffic behind that 207 GB/s figure is higher than the number itself suggests.) You can see on this thread that NVIDIA publishes theoretical performance figures for their cards, not typical real-world results! Once you know this, you know the meat and potatoes of what you are really getting out of your GPUs, your CPUs and your LLMs, and can plan accordingly.

The most important purchase you can make to future-proof your LLM setup is a motherboard with true PCIe 4.0 x16 on all 4 slots! That throws a lot of people, because it means a server board, and those usually start at $1200 alone.