docker: system: Automatic Container Backups

This guide walks through setting up automatic Docker container backups across two Linux systems.

So you have Docker up and running, and you need to back up some containers automatically (in case zombies wipe out the server farm. In today's world you never know!). Here is a four-part guide to make this as automatic as you like.

- Backup Server will automatically ssh into the production server.

- Backup Server will tell the production server to export each container to a .tar file.

- Backup Server will pull .tar files back to itself.

- This will all run on a timer (cron).

Part 1. Backup Server will automatically ssh into the production server.

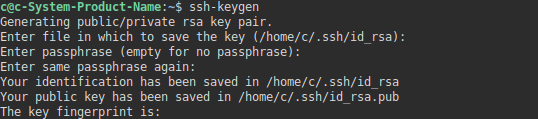

- This does not take much setup. On the backup server, as any user, generate a key pair.

ssh-keygen
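Because the backup will later run unattended from cron, the key needs to be usable without someone typing a passphrase. The following is a minimal sketch of a non-interactive key generation, assuming an ed25519 key and an empty passphrase; the function name `make_backup_key` is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: create a passphrase-less key pair for unattended backups.
# make_backup_key is a hypothetical helper, not part of OpenSSH.

make_backup_key() {
    key_file="$1"
    # Never overwrite a key that already exists.
    if [ -e "$key_file" ]; then
        echo "key already exists: $key_file" >&2
        return 0
    fi
    mkdir -p "$(dirname "$key_file")"
    # -q quiet, -t key type, -N "" empty passphrase, -f output file
    ssh-keygen -q -t ed25519 -N "" -f "$key_file"
}

# Usage (not run automatically):
#   make_backup_key "$HOME/.ssh/id_ed25519"
```

Using the default file name `~/.ssh/id_ed25519` means ssh should pick the key up without extra options. An empty passphrase is a trade-off: anyone who can read the private key file can log in to the production server as root.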

Then copy the public key to the root account of your production machine.

ssh-copy-id -p <port> root@<ip>

Once this succeeds, test that ssh can log in automatically and execute a command. In our case we ask it for the present working directory.

ssh -p <port> root@<ip> 'pwd'

Part 2. Production Server Takes Snapshots of all Docker Containers:

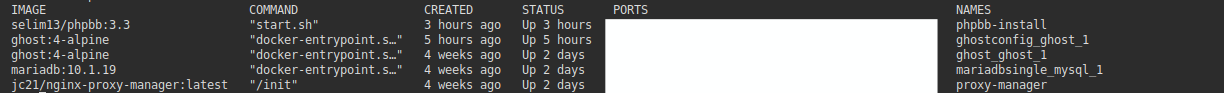

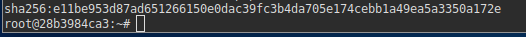

One can test this from the command line:

docker commit phpbb-install
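`docker commit` accepts an optional repository and tag after the container name; without one, the new image is left untagged and is harder to find later. Here is a small sketch of a tagged commit, run on the production server. The `-backup` suffix, the date tag, and the function name `snapshot_container` are assumptions for illustration only.

```shell
#!/usr/bin/env bash
# Sketch: commit a container to an image with a findable name.
# snapshot_container is a hypothetical helper.

snapshot_container() {
    name="$1"
    tag="$(date +%Y%m%d)"   # e.g. 20240131
    # docker commit CONTAINER [REPOSITORY[:TAG]]
    docker commit "$name" "${name}-backup:${tag}"
}

# Usage (not run automatically):
#   snapshot_container phpbb-install
```

Keep in mind that `docker commit` does not include data stored in volumes, so a database container that keeps its data in a volume needs that data backed up separately.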

Then we can put together a script that commits them all, letting the backup server initiate each call:

ssh -p <port> root@<ip> 'docker commit phpbb-install'

ssh -p <port> root@<ip> 'docker commit ghostconfig_ghost_1'

ssh -p <port> root@<ip> 'docker commit ghost_ghost_1'

ssh -p <port> root@<ip> 'docker commit mariasingle_mysql_1'

ssh -p <port> root@<ip> 'docker commit proxy-manager'

We can save this on the backup server, say in backup.sh, and then make it executable. We will test it next.

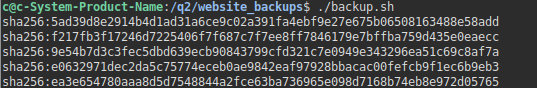

chmod +x backup.sh

We test it out and it actually runs really quickly.

Now for the heavy lifting: we get docker to make .tar files out of all the containers by adding export lines to our script:

- We could use a for loop with sed, but for the learner's sake we will keep the script as simple as possible. Sed can be confusing!

ssh -p <port> root@<ip> 'docker commit phpbb-install'

ssh -p <port> root@<ip> 'docker commit ghostconfig_ghost_1'

ssh -p <port> root@<ip> 'docker commit ghost_ghost_1'

ssh -p <port> root@<ip> 'docker commit mariasingle_mysql_1'

ssh -p <port> root@<ip> 'docker commit proxy-manager'

ssh -p <port> root@<ip> 'docker container export phpbb-install > phpbb-install.tar'

ssh -p <port> root@<ip> 'docker container export ghostconfig_ghost_1 > ghostconfig_ghost_1.tar'

ssh -p <port> root@<ip> 'docker container export ghost_ghost_1 > ghost_ghost_1.tar'

ssh -p <port> root@<ip> 'docker container export mariasingle_mysql_1 > mariasingle_mysql_1.tar'

ssh -p <port> root@<ip> 'docker container export proxy-manager > proxy-manager.tar'

- Always test your scripts frequently and take small incremental steps. Here is our output. We found out quickly that container export is SLOW. This part actually took quite a while.

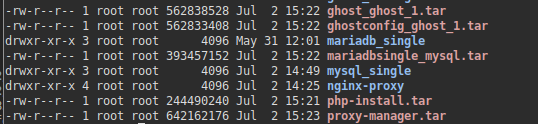

- Because the default working directory for root over ssh is /root, we can check there, and the .tar files are there!
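For readers who would rather not repeat a line per container, the script above could be collapsed into a plain for loop (no sed needed). This is only a sketch: `PORT` and `HOST` stand in for the `<port>` and `root@<ip>` placeholders, and the helper names `remote` and `backup_containers` are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch of a loop-based backup.sh.
# PORT and HOST are placeholders; set them to match your production server.
PORT="${PORT:-22}"
HOST="${HOST:-root@production.example}"

# Container names taken from the script above.
CONTAINERS="phpbb-install ghostconfig_ghost_1 ghost_ghost_1 mariasingle_mysql_1 proxy-manager"

# Run one command on the production server.
remote() {
    ssh -p "$PORT" "$HOST" "$1"
}

# Commit and export every container; stop at the first failure.
backup_containers() {
    for name in $CONTAINERS; do
        remote "docker commit $name" || return 1
        remote "docker container export $name > $name.tar" || return 1
    done
}

# Usage (not run automatically):
#   backup_containers
```

Building each file name from the container name also makes it harder to forget the .tar extension on one of the lines.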

Part 3. Backup Server will Automatically Pull .tar files to itself.

After this it is only a matter of appending an scp (secure copy) to your backup.sh. The period (.) means "the current directory": since the script runs out of the backup directory, the files are pulled and put right there.

Note: For whatever inconsistent reason, the port option in scp is uppercase -P while in ssh it is lowercase -p.

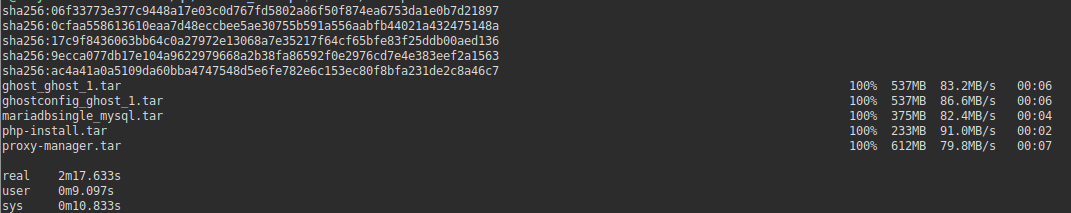

scp -P <port> root@<ip>:/root/*.tar .

When it is all done we time the script to see how long a full container backup takes:

time ./backup.sh

Some notes:

- 2GB of containers (4) backed up from a small VM with only 2 cores in roughly 2 1/2 minutes.

- The nginx proxy is ridiculously big. Does it need 642 MB just to proxy a couple of sites?

- The ghost blogs have almost identical sizes at about 562 MB.

- The MariaDB server came in at 393 MB.

- Commit was clean and seamless. I was able to run this backup script while writing this blog and saving it and it just works.

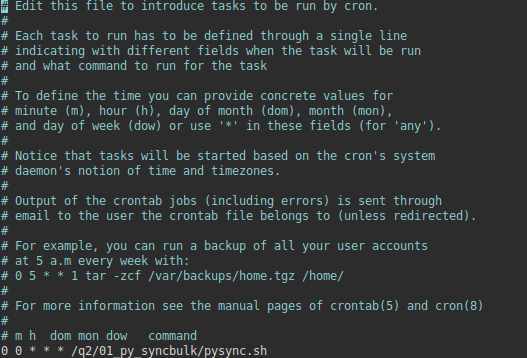
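As an alternative to exporting on the production server and then copying with scp, the export can be streamed straight over ssh into a file on the backup server, which avoids leaving .tar files in /root on production. This is a sketch of that variation, not the method used above; `PORT`, `HOST`, and the function name `stream_export` are placeholders for illustration.

```shell
#!/usr/bin/env bash
# Sketch: stream a container export over ssh into a local .tar file.
# PORT and HOST are placeholders; set them to match your production server.
PORT="${PORT:-22}"
HOST="${HOST:-root@production.example}"

stream_export() {
    name="$1"
    # The redirect happens on the backup server, so the tar lands locally.
    if ! ssh -p "$PORT" "$HOST" "docker container export $name" > "$name.tar"; then
        # Remove the partial file so a failed run is not mistaken for a backup.
        rm -f "$name.tar"
        return 1
    fi
}

# Usage (not run automatically):
#   stream_export phpbb-install
```

The difference from the script above is only where the `>` redirect sits: outside the quoted remote command rather than inside it.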

Part 4. Automating the backup by adding a cron job.

It is simply a matter of adding a crontab entry so the script runs on a schedule; daily is recommended. crontab.guru is a handy reference that explains the format.

One can edit the crontab for root with:

sudo crontab -e -u root

An example crontab:
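One possible entry, sketched here as a small helper that prints the line, runs the script every day at 02:00. The directory `/root/backups`, the log file name, and the function name `backup_cron_entry` are assumptions; use whatever directory holds your backup.sh.

```shell
#!/usr/bin/env bash
# Hypothetical location of backup.sh on the backup server.
BACKUP_DIR="${BACKUP_DIR:-/root/backups}"

# Print a crontab entry: minute 0, hour 2, every day of every month.
# The cd matters because backup.sh copies the .tar files into the
# current directory.
backup_cron_entry() {
    echo "0 2 * * * cd $BACKUP_DIR && ./backup.sh >> $BACKUP_DIR/backup.log 2>&1"
}

# Usage (not run automatically): append the entry to the current crontab.
#   (crontab -l 2>/dev/null; backup_cron_entry) | crontab -
```

The job has to run as the same user whose key was copied to the production server in Part 1. If the keys were generated as a regular user rather than root, the entry likely belongs in that user's crontab (plain `crontab -e`) instead of root's.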

Once you have added in your script it is only a matter of waiting for it to execute.